Setting Up a Self-Hosted Jitsi Meet Instance with Multiple Video Bridges

Infrastructure as Code Approach

I have since adapted our instance to be provisioned with an Ansible playbook and Terraform as we only require our Jitsi Meet instance once a year. I plan to update this article to include instructions on how to achieve this.

terraform-azurerm-jitsi-meet is my Terraform module provided under the MIT licence with no guarantee or warranty. There is a basic example included in the repository to help you get started if you want to do this using Infrastructure as Code.

Eventually, I hope to publicise the Ansible role but this is currently very tightly integrated into my organisation. Until then, you should be able to deploy the Azure infrastructure using the Terraform module and then set up Jitsi Meet using the instructions in this post.

Aim

To set up a self-managed, functional and horizontally-scalable Jitsi Meet instance in the cloud, using a custom domain name. I won’t be covering authentication in this article.

I am using Microsoft Azure, but this should apply fairly easily to any provider or even an on-premises configuration.

Getting Started

At least two servers are required for this set-up. The exact number required depends on the number of meetings and participants you are expecting to host at any one time.

Web Server Requirements

I opted to use a Microsoft Azure Standard D2s v3 instance for the Jitsi main web server.

Standard D2s v3 has the following specification:

- 2 vCPUs

- 8 GB memory

Video Bridge Requirements

For the video bridges, I decided to use Standard D4ls v5 instances because they offer more vCPUs than the tier used for the main server. This is important because the video bridge processes all video and audio traffic in your meetings, with the exception of P2P meetings.

Further, the number of video bridges you need directly correlates with the number of calls and participants you’d like to support. The good news - it’s very easy to add additional video bridges on-the-fly without any downtime!

Standard D4ls v5 has the following specification:

- 4 vCPUs

- 8 GB memory

Network Requirements

| Source | Destination | Ports | Protocol | Purpose |
|---|---|---|---|---|
| All (or your static IP) | All | 22 | TCP | SSH access to Jitsi servers |
| All | Jitsi Main Server | 80, 443 | TCP | HTTP/HTTPS access to the Jitsi web UI for end users |
| All | Jitsi Video Bridge Servers | 10000-20000 | UDP | RTP media traffic for audio/video feeds |
| Jitsi Video Bridge Servers | Jitsi Main Server | 5222 | TCP | XMPP client connections from JVB to JMS |
| Jitsi Main Server | Jitsi Video Bridge Servers | 9090 | TCP | Allows nginx on the Jitsi Main Server to proxy websocket requests to the Jitsi Video Bridge Servers |
| Jitsi Main Server | Jitsi Video Bridge Servers | 8080 | TCP | Optional - allows Jitsi Main Server to poll the internal Jetty web server on the Jitsi Video Bridge Servers to get metrics |
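Once the servers are deployed and the services are running, the TCP rows of this table can be spot-checked from the relevant machine. The following is a minimal sketch, assuming bash and the coreutils `timeout` command are available. `check_tcp` and `report` are hypothetical helper names, not part of Jitsi. It does not cover the UDP media range, because UDP has no handshake to test against.

```shell
# check_tcp HOST PORT: succeed if a TCP connection opens within 3 seconds.
# Hypothetical helper for illustration; relies on bash's /dev/tcp redirection.
check_tcp() {
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# report HOST PORT: print a one-line result for a single row of the table.
report() {
    if check_tcp "$1" "$2"; then
        echo "open    $1:$2"
    else
        echo "closed  $1:$2"
    fi
}

# Example usage (hostname and IP are the ones used in this post):
#   report meet.edanbrooke.com 5222   # run on a video bridge
#   report 10.0.0.5 9090              # run on the Jitsi Main Server
```

A "closed" result can mean either a firewall rule is missing or the service is not listening yet, so it is worth re-running after each installation step.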

Deployment

First, provision your servers - I installed Ubuntu 22.04 Server on my Azure VMs. We will set up the Jitsi Main Server (JMS), followed by the Jitsi Video Bridge Servers (JVBs).

DNS Record

First things first: you need to decide which domain you’re going to use for your Jitsi Meet instance. In this example we will use meet.edanbrooke.com. Create a DNS address (A) record for your domain and point it to the IP address of your Jitsi Main Server.

Install Jitsi Meet

I have taken some of these instructions from Jitsi’s self-hosting guide. Check it for any updates.

# Update your package manager cache

sudo apt update

# Install apt-transport-https to make sure we can use HTTPS repositories

sudo apt install apt-transport-https

# Enable the Ubuntu 'universe' package repository

sudo apt-add-repository universe

# Update package manager cache again now we've enabled the universe repository

sudo apt update

# Trust the Prosody APT package repository signing key

curl -sL https://prosody.im/files/prosody-debian-packages.key | sudo tee /etc/apt/keyrings/prosody-debian-packages.key

# Add the Prosody package repository to APT configuration

echo "deb [signed-by=/etc/apt/keyrings/prosody-debian-packages.key] http://packages.prosody.im/debian $(lsb_release -sc) main" | sudo tee /etc/apt/sources.list.d/prosody-debian-packages.list

# Install Lua

sudo apt install lua5.2

# Trust the Jitsi APT package repository signing key

curl -sL https://download.jitsi.org/jitsi-key.gpg.key | gpg --dearmor | sudo tee /usr/share/keyrings/jitsi-keyring.gpg > /dev/null

# Add the Jitsi package repository to APT configuration

echo "deb [signed-by=/usr/share/keyrings/jitsi-keyring.gpg] https://download.jitsi.org stable/" | sudo tee /etc/apt/sources.list.d/jitsi-stable.list

# Update package manager cache now Prosody and Jitsi sources have been added

sudo apt update

# Install Jitsi Meet

sudo apt install jitsi-meet

When you install Jitsi Meet you’ll be asked for the hostname of your instance. Example: meet.edanbrooke.com. You will also be asked how you want to configure your user-facing SSL certificate. I used Let’s Encrypt because it’s the easiest method, so I recommend that you choose Let’s Encrypt too.

Check Jitsi Meet Is Working

During the installation of the jitsi-meet package, nginx will be installed. If you already have

Apache installed, the Jitsi Meet installer will instead configure Apache. Please note this post

doesn’t cover how to set this up with Apache, so use nginx if you can.

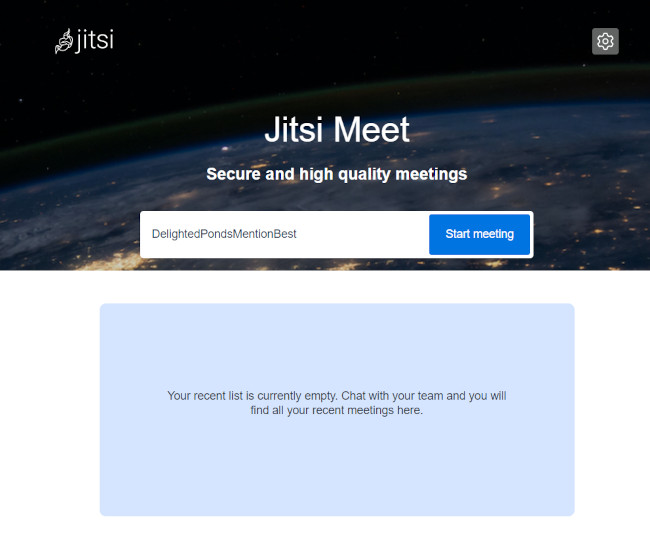

Check that you can get to your Jitsi instance by browsing to your instance domain. You should see a page similar to the below. Neat!

Everything should work at this stage and you should be able to host/join meetings. When you install the Jitsi Meet package it also installs the video bridge, which is fine for very small traffic volume and gets you up and running straight away. However, we’re expecting to host a large number of simultaneous conferences and users so we’d rather host the video bridge on dedicated servers.

Preparing Jitsi For Multiple Video Bridges

Stop and remove the Jitsi Video Bridge package from the Jitsi Main Server (JMS):

sudo /etc/init.d/jitsi-videobridge2 stop

sudo apt remove jitsi-videobridge2

Modify the nginx configuration on the Jitsi Main Server to support multiple JVBs:

sudo nano /etc/nginx/sites-available/meet.edanbrooke.com.conf

Locate the following configuration in the file:

# colibri (JVB) websockets for jvb1

location ~ ^/colibri-ws/default-id/(.*) {

proxy_pass http://jvb1/colibri-ws/default-id/$1$is_args$args;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

tcp_nodelay on;

}

Add the following after it:

# colibri (JVB) websockets for additional JVBs

location ~ ^/colibri-ws/([0-9.]*)/(.*) {

proxy_pass http://$1:9090/colibri-ws/$1/$2$is_args$args;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

tcp_nodelay on;

}

Save the file and restart nginx: sudo /etc/init.d/nginx restart.

Your Jitsi server is now ready for multiple video bridges to connect to it.
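To make the routing rule concrete, the sketch below applies the same regular expression as the added location block to a request path and prints the upstream URL nginx would proxy to. It is an illustration only: `jvb_upstream` is a hypothetical helper, the `conf/endpoint` path segments are made-up placeholders, and it mirrors just the regex rather than nginx's full location matching or the `$is_args$args` query string handling.

```shell
# jvb_upstream PATH: print the upstream URL that the added location block
# would proxy PATH to, or nothing if the regex does not match.
jvb_upstream() {
    printf '%s\n' "$1" |
        sed -nE 's#^/colibri-ws/([0-9.]*)/(.*)$#http://\1:9090/colibri-ws/\1/\2#p'
}

# A bridge whose server-id is its internal IP is reached directly on port 9090.
jvb_upstream /colibri-ws/10.0.0.5/conf/endpoint
# prints: http://10.0.0.5:9090/colibri-ws/10.0.0.5/conf/endpoint

# "default-id" contains letters, so this regex does not match it;
# that path is still handled by the original jvb1 block.
jvb_upstream /colibri-ws/default-id/conf/endpoint
# prints nothing
```

Because the first path segment becomes the upstream host, this only works if each bridge's server ID is an IP address the Jitsi Main Server can reach. Running sudo nginx -t before restarting is a cheap way to catch syntax errors in the edited file.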

Installing Additional Video Bridges

Repeat the below steps for each JVB you want to add to your Jitsi installation.

Note: it’s better to add more servers to the cluster (horizontally scale) than to increase the specifications of one individual server (vertically scale). This gives you more flexibility and resilience, as well as more network bandwidth.

As with the Jitsi Main Server, we’ll add some APT sources. However, we don’t need the Prosody repository because Prosody only runs on the Jitsi main server.

# Update your package manager cache

sudo apt update

# Install apt-transport-https to make sure we can use HTTPS repositories

sudo apt install apt-transport-https

# Enable the Ubuntu 'universe' package repository

sudo apt-add-repository universe

# Update package manager cache again now we've enabled the universe repository

sudo apt update

# Trust the Jitsi APT package repository signing key

curl -sL https://download.jitsi.org/jitsi-key.gpg.key | gpg --dearmor | sudo tee /usr/share/keyrings/jitsi-keyring.gpg > /dev/null

# Add the Jitsi package repository to APT configuration

echo "deb [signed-by=/usr/share/keyrings/jitsi-keyring.gpg] https://download.jitsi.org stable/" | sudo tee /etc/apt/sources.list.d/jitsi-stable.list

# Update package manager cache now the Jitsi source has been added

sudo apt update

# Install Jitsi Video Bridge

sudo apt install jitsi-videobridge2

When you install the Jitsi Video Bridge, you’ll be asked for the hostname of your Jitsi Main Server. Be sure to enter it correctly. In my case, it’s meet.edanbrooke.com.

Hosts Configuration

Your Jitsi Video Bridges (JVBs) need to be able to access your Jitsi Main Server using its public hostname. Depending on your set-up, you might need to use your internal network addresses to communicate between the Jitsi servers rather than the public IP. If this is the case, you’ll need to set your video bridge servers to resolve your Jitsi hostname to the internal IP of your Jitsi Main Server using your /etc/hosts file. You could also use a split/private DNS solution, but the simplest way is to use the hosts file.

In my case, my Jitsi Main Server (JMS) has an internal IP of 10.0.0.4 in Microsoft Azure, and the video bridges are allocated 10.0.0.5 - 10.0.0.7. I added the following line to the /etc/hosts file on each of my Jitsi Video Bridge Servers:

10.0.0.4 meet.edanbrooke.com

Be sure to verify the connectivity from your Video Bridge Server to your Jitsi Main Server: ping meet.edanbrooke.com.
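Ping confirms reachability, but it can also be useful to confirm that the hosts entry itself is present on every bridge. Here is a minimal sketch, assuming a POSIX shell with awk. `hosts_maps` is a hypothetical helper name.

```shell
# hosts_maps FILE IP NAME: succeed if FILE has a line mapping IP to NAME.
# Hypothetical helper; pass /etc/hosts as FILE on a real video bridge.
hosts_maps() {
    awk -v ip="$2" -v name="$3" '
        $1 == ip {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break        # ignore trailing comments
                if ($i == name) found = 1
            }
        }
        END { exit !found }
    ' "$1"
}

# Example usage on a video bridge:
#   hosts_maps /etc/hosts 10.0.0.4 meet.edanbrooke.com && echo "override in place"
#   getent hosts meet.edanbrooke.com   # shows what the resolver actually returns
```

The helper only inspects the file. `getent hosts` is the better check of what the system will actually resolve, since it goes through the same lookup order as other programs.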

Set Prosody Server ID

When your Jitsi Video Bridges (JVBs) register with Prosody on the Jitsi Main Server (JMS), they provide a server ID. This needs to be unique per video bridge. It’s also used by the Jitsi Main Server to decide where to proxy websocket traffic to.

Earlier on, we added a location block to our nginx configuration on the JMS that relies on the server-id on each of our JVBs being set to the internal IP address of the JVB.

Edit /etc/jitsi/videobridge/jvb.conf and find the websockets section. Below domain = "meet.edanbrooke.com:443" you should add:

server-id = "<internal IP of JVB>"

If your infrastructure is using only public IPs and doesn’t have any internal access, it’s also safe to use the public IP of the JVB as the server ID. However, please note nginx will use this to proxy websocket traffic so if you are doing this over the Internet you’ll likely see performance issues. It’s best to use a dedicated internal network if possible.
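Since a wrong or duplicated server ID sends websocket traffic to the wrong bridge, a quick check on each JVB after editing may help. This is a sketch that assumes the setting is written exactly in the quoted form shown above (server-id = "..."). `jvb_server_id` and `server_id_ok` are hypothetical helper names.

```shell
# jvb_server_id FILE: print the first quoted server-id value found in FILE.
jvb_server_id() {
    sed -nE 's/^[[:space:]]*server-id[[:space:]]*=[[:space:]]*"([^"]*)".*$/\1/p' "$1" |
        head -n 1
}

# server_id_ok FILE IP: succeed if the configured server-id equals IP.
server_id_ok() {
    [ "$(jvb_server_id "$1")" = "$2" ]
}

# Example usage on a video bridge whose internal IP is 10.0.0.5:
#   server_id_ok /etc/jitsi/videobridge/jvb.conf 10.0.0.5 && echo "server-id matches"
```

The helper is a plain text match, not a parser for the configuration format, so other equivalent spellings of the setting would not be detected.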

Set Video Bridge Communicator Properties

To register with Prosody, your Jitsi Video Bridge needs to have the right configuration in /etc/jitsi/videobridge/sip-communicator.properties.

- Set org.jitsi.videobridge.xmpp.user.shard.HOSTNAME to the public hostname of your Jitsi Main Server. For example, change localhost to meet.edanbrooke.com.

- Set org.jitsi.videobridge.xmpp.user.shard.PASSWORD to the correct password. To find this password, look in the same file on your Jitsi Main Server. A password is generated automatically when the jitsi-meet package is installed. Use this password for all future video bridges, or pick a new secure password. Just make sure all the bridges have the same password.

Depending on your environment you may need to configure some NAT (network address translation) settings, too.

- On each video bridge, set org.ice4j.ice.harvest.NAT_HARVESTER_LOCAL_ADDRESS to the Jitsi Video Bridge Server’s private IP.

- On each video bridge, set org.ice4j.ice.harvest.NAT_HARVESTER_PUBLIC_ADDRESS to the Jitsi Video Bridge Server’s public IP.
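With several bridges it is easy to mistype one of these keys, so reading the values back can save some debugging. Below is a minimal sketch, assuming a POSIX shell with awk and a file in plain key=value form. `get_prop` is a hypothetical helper name.

```shell
# get_prop FILE KEY: print the value of KEY from a key=value properties file.
# If KEY appears more than once, the last occurrence wins.
# Exits non-zero when KEY is not present.
get_prop() {
    awk -v key="$2" '
        index($0, key "=") == 1 { value = substr($0, length(key) + 2); seen = 1 }
        END { if (seen) print value; else exit 1 }
    ' "$1"
}

# Example usage on a video bridge:
#   f=/etc/jitsi/videobridge/sip-communicator.properties
#   get_prop "$f" org.jitsi.videobridge.xmpp.user.shard.HOSTNAME
#   get_prop "$f" org.ice4j.ice.harvest.NAT_HARVESTER_LOCAL_ADDRESS
#   get_prop "$f" org.ice4j.ice.harvest.NAT_HARVESTER_PUBLIC_ADDRESS
```

If the file is not readable by your user, run the helper from a root shell. Comparing the HOSTNAME and PASSWORD output across bridges is a quick way to confirm they all agree.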

Once you’ve made the required changes, restart the Jitsi Video Bridge service:

sudo /etc/init.d/jitsi-videobridge2 restart

Test Your Installation

You should now be able to set up meetings again and the new Jitsi Video Bridges will be used.

If you want to validate that your meeting traffic is being routed to the correct JVB, you can use Wireshark with the following display filter. Replace <my LAN IP> with the LAN IP of your computer on your local network.

(udp.port == 10000) and (ip.src == <my LAN IP>)

Once you’re in a meeting with at least one non-P2P participant, you’ll see UDP traffic to port 10000 on one of your video bridges. If you have multiple JVBs running, you can stop the JVB process on one of the servers and after a brief disconnection you should notice that the meeting recovers and that you are seamlessly connected to a different JVB.